XML – Why it should be the “format of record”

Kaveh Bazargan

Everyone has heard of XML, the “geeky” format that almost all scholarly publishers (rightly) demand from their typesetters and then archive for future use. In this short post I want to give reasons why XML should be considered the most important format (more important than PDF or HTML) and how publishers can ensure what they are archiving is 100% accurate.

Form and content

It is important to appreciate the difference between “form” and “content” of, say, a journal article. Content is just a complete “description” of what is being published, with no regard to what it “looks like”. XML is in effect a high level description of this content. So aspects such as type size, page width, and line spacing have (or should have) no place in XML. XML is not designed to be read directly, but needs to be rendered into a human readable form.

Form, on the other hand, refers to the style or rendering of the content, e.g. PDF, HTML, or EPUB, which show the content in different ways, sometimes according to the preferences of the reader.

Why it is useful to separate form from content

To take a very simple example, let’s consider the word “very” that needs to be emphasized in a sentence. In the PDF it might look like:

…this is a very important concept… (with “very” set in italics, say)

In the HTML, perhaps:

…this is a very important concept… (with “very” set in bold, say)

Both successfully convey emphasis, but in different styles. The XML, on the other hand, is (or should be) agnostic about how the word “very” looks, and is coded something like:

…this is a <em>very</em> important concept…

So it is simply conveying the concept of emphasis, not the rendering. We can think of any number of “renderings”, each conveying the concept of emphasis, but all derived from the XML.

The very simple example given above can, of course, be extended to the complete content of a publication, with the XML used to “tag” the content logically and the rendered formats converting those tags to visual (or audible) content.
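As a rough illustration of going from content to form, the Python sketch below renders the same logical <em> tag in three different ways. The style names, the <p> wrapper and the tag-to-markup table are hypothetical, chosen only for illustration. Each rendering is a simple lookup from tag to style; going back from an asterisk or an italic to the intended meaning would require guessing.

```python
import xml.etree.ElementTree as ET

# Hypothetical styles: each maps a logical tag to the markup that renders it.
RENDERINGS = {
    "html": {"em": ("<i>", "</i>")},
    "html-bold": {"em": ("<strong>", "</strong>")},
    "plain": {"em": ("*", "*")},
}


def render(elem: ET.Element, style: str) -> str:
    """Render an element's mixed content, converting known tags per style.

    Unknown tags (such as the <p> wrapper) contribute only their text.
    For brevity, text is not HTML-escaped here.
    """
    open_tag, close_tag = RENDERINGS[style].get(elem.tag, ("", ""))
    parts = [open_tag, elem.text or ""]
    for child in elem:
        parts.append(render(child, style))
        parts.append(child.tail or "")  # text that follows the child element
    parts.append(close_tag)
    return "".join(parts)


if __name__ == "__main__":
    root = ET.fromstring("<p>this is a <em>very</em> important concept</p>")
    for style in RENDERINGS:
        print(f"{style:>9}: {render(root, style)}")
```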

Other advantages of XML

Future-proofed content

Keeping content logically tagged in a well-structured XML file means that a publisher’s content is effectively future-proofed. Suppose that several years from now a new format comes along, say a new eBook format. If the XML is correctly tagged, it should be possible to write a one-time filter that converts all the XML files into the new format, with no manual work involved.
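A one-time filter of that kind might look something like the following Python sketch. The “new format” is imagined (one line per paragraph, emphasis wrapped in underscores), and the folder names and the .newfmt suffix are hypothetical. The point is only that, once the tagging is reliable, the whole archive can be converted in a single unattended pass.

```python
import xml.etree.ElementTree as ET
from pathlib import Path


def to_new_format(root: ET.Element) -> str:
    """Hypothetical future format: one line per <p>, emphasis as _word_.

    Only direct children of <p> are inspected; deeper markup is flattened.
    """
    lines = []
    for p in root.iter("p"):
        text = p.text or ""
        for child in p:
            inner = "".join(child.itertext())
            text += f"_{inner}_" if child.tag == "em" else inner
            text += child.tail or ""
        lines.append(text)
    return "\n".join(lines)


def convert_archive(src: Path, dst: Path, suffix: str = ".newfmt") -> int:
    """Convert every .xml file in src into the new format in dst; return the count."""
    dst.mkdir(parents=True, exist_ok=True)
    count = 0
    for xml_file in sorted(src.glob("*.xml")):
        root = ET.parse(xml_file).getroot()
        out = dst / (xml_file.stem + suffix)
        out.write_text(to_new_format(root), encoding="utf-8")
        count += 1
    return count
```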

Data mining

XML is, in effect, data, so data mining can be done with full confidence. If data mining is attempted on HTML or PDF files, there might be a need for artificial intelligence to “reverse engineer” the format and get back to the original content. No such reverse engineering or “guessing” is needed with XML.
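For example, a minimal Python sketch that counts the publication years of cited references needs no guesswork at all, because each year is explicitly tagged. The element names (ref, year) are loosely modelled on JATS and are an assumption here; a particular publisher’s DTD may differ.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Hypothetical sample, loosely modelled on a JATS reference list.
SAMPLE = """<article><back><ref-list>
  <ref id="r1"><element-citation><year>2019</year><fpage>16350</fpage></element-citation></ref>
  <ref id="r2"><element-citation><year>2021</year></element-citation></ref>
  <ref id="r3"><mixed-citation>Smith J. <year>2019</year>.</mixed-citation></ref>
</ref-list></back></article>"""


def cited_years(xml_text: str) -> Counter:
    """Count the publication years of cited references, read straight from tags."""
    root = ET.fromstring(xml_text)
    years = Counter()
    for ref in root.iter("ref"):
        year = ref.find(".//year")  # first <year> anywhere inside this reference
        if year is not None and year.text:
            years[year.text.strip()] += 1
    return years


if __name__ == "__main__":
    print(cited_years(SAMPLE).most_common())
```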

Accessible content

Well-structured and granular XML files are the key to making content accessible. Suppose we need the content to be read aloud automatically for blind or visually impaired readers. A rendered format would be ambiguous, because the screen reader would have to guess whether italic means emphasis or, say, a mathematical variable. The XML, however, is unambiguous about what is emphasis. The screen reader might, for instance, raise the volume of the audio to signal it.
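As one possible illustration, the Python sketch below converts logical emphasis into SSML (the W3C Speech Synthesis Markup Language), whose emphasis element asks a speech engine to stress a word; whether that is done by volume, pitch or timing is up to the engine. The input tag names are assumptions, and real SSML documents normally carry version and namespace attributes on the speak element, omitted here for brevity.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape


def to_ssml(elem: ET.Element) -> str:
    """Map logical article markup onto SSML elements, escaping plain text."""
    inner = escape(elem.text or "")
    for child in elem:
        inner += to_ssml(child) + escape(child.tail or "")
    if elem.tag == "em":  # logical emphasis -> spoken emphasis
        return f'<emphasis level="strong">{inner}</emphasis>'
    if elem.tag == "p":  # paragraph -> SSML paragraph
        return f"<p>{inner}</p>"
    return inner  # other tags: keep the text, drop the markup


def paragraph_to_ssml(xml_text: str) -> str:
    """Wrap the converted content in the SSML root element."""
    return "<speak>" + to_ssml(ET.fromstring(xml_text)) + "</speak>"


if __name__ == "__main__":
    print(paragraph_to_ssml("<p>this is a <em>very</em> important concept</p>"))
```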

Potential nightmare of multiple formats

In the old days of scholarly publishing, there was only one “format”, namely the print, and life was simple! But now the content is almost always disseminated in several formats, most commonly HTML and PDF. And XML is almost always required but is usually not made public. So there are at least three formats that are maintained by a publisher.

We are all familiar with the term “Version of Record” (or VoR). But with so many files maintained, an important question arises: which should be the definitive Format of Record for a particular publication? To illustrate the issue, suppose that some years after an article has been published, a reader notices an inconsistency between different formats of the article: say, the symbol alpha in the HTML appears as a beta in the PDF. They contact the publisher for clarification and suppose, for argument’s sake, the publisher checks the XML and finds that the corresponding symbol is saved as a gamma. This is a nightmare we should, and can, avoid by nominating a definitive “format of record”. But which format should that be?

Which should be the publisher’s “format of record”?

It is clear from the above example that going from content to form, say from the XML version “<em>very</em>” to the more readable “very”, is logically trivial, but going the other way is next to impossible. So it makes sense for the XML to be nominated as the definitive format. But here is the catch – the XML is very rarely viewed after an article has been published; it is simply archived. In fact it is usually not even published, whereas the HTML and PDF are constantly viewed. So any errors in the XML are likely to stay hidden for a long time. How can we have full confidence in the archived XML? The answer lies in the workflow and the technology used to produce the various formats, so let us look conceptually at how the different formats are created.

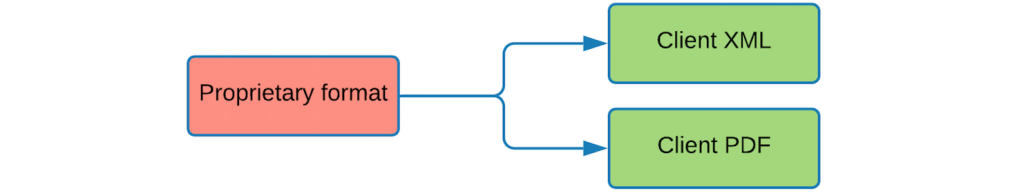

Forked approach

The traditional way in which typesetters produce content in different formats is to use a proprietary typesetting engine that they are familiar with, and then export the formats needed, using conversion filters. For simplicity we’ll just deal with the PDF here.

The “filters” to convert the content into different formats are often designed and configured by vendors, and are as complex as the content they are converting. So STM content will need highly complex conversion filters. And that complexity brings with it the dangers of errors in content. So for the sake of argument let’s take a simple example – suppose that there is a bug in the conversion filter (all software has bugs!) whereby the rare combination xyx is converted correctly in the PDF and other “readable” formats, but is incorrectly converted to xyy in the XML. The XML is never proofread, so this error will lie undetected. And when it is detected, there is no automated way of fixing it, other than by laboriously checking all cases against the author’s original submitted file. Needless to say this is a nightmare scenario best avoided.

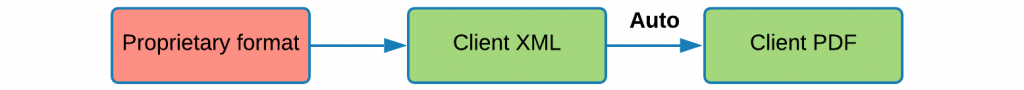

Automated XML-first

The obvious solution is to ensure that the publisher’s XML is created first, and that the readable formats are then converted fully automatically from that XML.

Let us take the software bug referred to above. The incorrect conversion of xyx to xyy is no longer hidden in the XML: it will be visible in the PDF and in every other readable version, and will be spotted immediately by anyone reading them. With fully automated XML-first, we can think of the XML as being effectively proofread at every stage.
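The difference between the two workflows can be modelled in a few lines of Python. This is a toy, not a real typesetting pipeline: export_xml is a hypothetical stand-in for the filter with the xyx to xyy bug, and render_text stands in for the PDF and HTML renderers. In the forked workflow the XML silently disagrees with the proofread PDF; in the XML-first workflow the same bug shows up in the PDF, where a reader will see it.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape


def export_xml(source: str) -> str:
    """Hypothetical conversion filter with the rare bug: 'xyx' becomes 'xyy'."""
    return "<p>" + escape(source.replace("xyx", "xyy")) + "</p>"


def render_text(xml_text: str) -> str:
    """A readable rendering derived solely from the XML."""
    return "".join(ET.fromstring(xml_text).itertext())


def forked(source: str) -> dict:
    # The PDF comes straight from the typesetting source; the XML is a side export.
    return {"pdf": source, "xml": export_xml(source)}


def xml_first(source: str) -> dict:
    # The XML is produced once and every readable format is derived from it
    # (in practice the PDF and HTML renderers would differ; here both are text).
    xml = export_xml(source)
    return {"xml": xml, "pdf": render_text(xml), "html": render_text(xml)}


def xml_disagrees_with_pdf(outputs: dict) -> bool:
    """True when the archived XML says something the proofread PDF does not."""
    return render_text(outputs["xml"]) != outputs["pdf"]


if __name__ == "__main__":
    src = "the sequence xyx appears here"
    for name, flow in (("forked", forked), ("xml-first", xml_first)):
        out = flow(src)
        print(f"{name:>9}: PDF reads {out['pdf']!r}; hidden error: {xml_disagrees_with_pdf(out)}")
```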

The acid test for XML-first delivery

The phrase “XML-first” is almost a cliché, and the process has been demanded of typesetting vendors for many years. But the devil is in the detail, and the process has to be what we call “pure” XML-first. Unfortunately some vendors are using the term loosely, and that defeats the purpose. I drew attention to this – somewhat lightheartedly – in a presentation at an STM Association meeting. Here are some questions publishers can ask vendors:

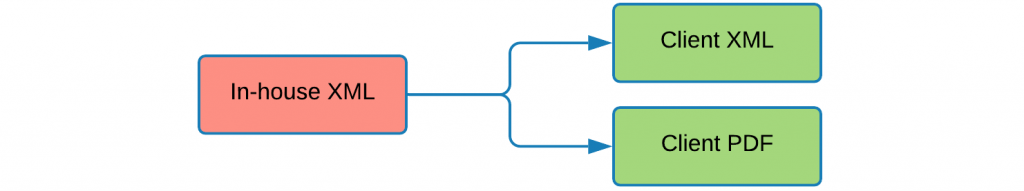

Is the source XML the client XML?

Ideally, the XML that is used to create the final PDF should be the final XML that is delivered to the client, not a generic in-house XML. Or worse still, a proprietary internal file that looks like XML. If the source XML is not the client XML, then we are back to the forking problem again, and the possible conversion bugs.
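One simple way to check this, assuming the vendor can supply the exact file that was fed to the pagination engine, is to compare it byte for byte with the delivered XML, for example via a SHA-256 checksum as in this Python sketch. The function and file names are hypothetical. Any difference at all, even in whitespace, would be worth a question.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hex SHA-256 digest of a file, read in chunks to handle large files."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def same_xml(typesetting_source: Path, delivered: Path) -> bool:
    """True only if the file that drove the PDF is byte-identical to the delivered XML."""
    return sha256_of(typesetting_source) == sha256_of(delivered)
```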

Can PDF be generated from XML archive only?

With pure XML-first, the published PDF (or other formats) can be created fully automatically from the XML archive (XML and embedded graphics), and with no other content needed. So the XML should be the “typesetting file”. This is a good test for the “purity” of the process.
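Part of this test can be automated. The Python sketch below checks one necessary (but not sufficient) condition: that every graphic referenced in the XML is actually present in the archive folder. It assumes JATS-style graphic and inline-graphic elements whose xlink:href attributes point to files alongside the XML. The definitive test remains regenerating the PDF from the archive alone and comparing it with the published one.

```python
import xml.etree.ElementTree as ET
from pathlib import Path

# ElementTree stores namespaced attributes under their expanded {uri}name form.
XLINK_HREF = "{http://www.w3.org/1999/xlink}href"


def missing_assets(xml_path: Path) -> list[str]:
    """List graphics referenced by the XML that are absent from its folder."""
    root = ET.parse(xml_path).getroot()
    missing = []
    for elem in root.iter():
        if elem.tag in ("graphic", "inline-graphic"):
            href = elem.get(XLINK_HREF)
            if href and not (xml_path.parent / href).is_file():
                missing.append(href)
    return missing
```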

But what about the final pagination?

A fair question that is often asked is: if the XML does not store any visual information, how can we ensure that the pagination of the final PDF is correct? What if some manual tweaking is required, e.g. in double-column pages, or to take a word over to the next line? Well, XML provides “Processing Instructions” (PIs) specifically for such cases. During the automated conversion, the PIs are passed to the pagination “engine”, ensuring a true XML-first workflow as well as beautiful pagination.
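To show what this looks like, here is a Python sketch that collects the processing instructions from an XML file using the standard library’s minidom parser, which keeps PIs as nodes. The PI target “pagination” and its instructions (keep-with-next, break-before) are hypothetical; each typesetting system defines its own. Because the PIs live inside the XML itself, a pagination engine reading them needs nothing beyond the archived file.

```python
from xml.dom import minidom


def collect_pis(xml_text: str) -> list[tuple[str, str]]:
    """Return (target, data) for every processing instruction, in document order."""
    doc = minidom.parseString(xml_text)
    found = []

    def walk(node):
        for child in node.childNodes:
            if child.nodeType == child.PROCESSING_INSTRUCTION_NODE:
                found.append((child.target, child.data))
            walk(child)  # descend into elements; leaf nodes have no children

    walk(doc)
    return found


if __name__ == "__main__":
    sample = ("<article><p>A long paragraph<?pagination keep-with-next?></p>"
              "<p>Another one<?pagination break-before?></p></article>")
    for target, data in collect_pis(sample):
        print(target, "->", data)
```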

Final thoughts

A few ideas to think about…

Take a look at your XML

At first XML is intimidating. After all, it is designed to be read by a computer, not by humans. But I encourage publishers to dive in and look at the files from time to time – it is the most important component of your content! In fact, good XML should be logical and readable. The best way is to open the files in a text editor (not a word processor). On the Mac I can recommend the excellent BBEdit, and on Windows I know that Notepad++ is excellent. Both are available in free versions. If your content has mathematics, then you might see some verbose MathML coding, but most other content should be reasonably readable.
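If a file arrives as one long line, a few lines of Python can re-indent it before you open it in the editor. This sketch uses xml.etree.ElementTree.indent, available from Python 3.9. Treat the output as a viewing copy only: re-indenting can alter whitespace between inline elements, so it should never be written back to the archive.

```python
import xml.etree.ElementTree as ET


def pretty(xml_text: str, indent: str = "  ") -> str:
    """Return a re-indented copy of the XML for easier reading (viewing only)."""
    root = ET.fromstring(xml_text)
    ET.indent(root, space=indent)  # modifies whitespace-only text and tails in place
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(pretty("<article><front><title>XML</title></front></article>"))
```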

Does the coding make sense?

You will be one of the few people looking at the XML code, so you might be surprised to find some bad coding or even errors! XML is supposed to be logical, so content should be tagged in a logical way. So if you find that emphasis is coded as italic, i.e. <it>very</it> rather than <em>very</em> there might be a good reason, but there is no harm in questioning it. The references are a good place to look. Does the coding make sense? A common case of questionable coding is to tag the last page of a reference in the shortened “print” form, e.g. <fpage>16350</fpage> <lpage>62</lpage>. The last page should really be tagged as 16362, even though it appears as …–62 in the PDF.
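Checks of this kind can also be partly scripted. The Python sketch below flags presentational tags and shortened last pages. The set of presentational tag names is a hypothetical example, and the page-expansion rule (borrow the leading digits of the first page, rolling over if the result would be smaller, so that 199–02 becomes 202) is one reasonable convention rather than a standard.

```python
import xml.etree.ElementTree as ET

# Hypothetical examples of tags that describe appearance rather than meaning.
PRESENTATIONAL = {"it", "i", "b"}


def expand_last_page(fpage: str, lpage: str) -> str:
    """Expand a shortened last page, e.g. ('16350', '62') -> '16362'."""
    if not (fpage.isdigit() and lpage.isdigit()) or len(lpage) >= len(fpage):
        return lpage  # already full-length, or not purely numeric
    full = int(fpage[: len(fpage) - len(lpage)] + lpage)
    if full < int(fpage):  # e.g. 199-02 means 202, not 102
        full += 10 ** len(lpage)
    return str(full)


def review(xml_text: str) -> list[str]:
    """Report tagging that may be worth questioning with the vendor."""
    root = ET.fromstring(xml_text)
    notes = []
    for elem in root.iter():
        if elem.tag in PRESENTATIONAL:
            notes.append(f"presentational tag <{elem.tag}>: {''.join(elem.itertext())}")
    for parent in root.iter():
        fpage, lpage = parent.find("fpage"), parent.find("lpage")
        if fpage is not None and lpage is not None and fpage.text and lpage.text:
            short = lpage.text.strip()
            full = expand_last_page(fpage.text.strip(), short)
            if full != short:
                notes.append(f"shortened lpage {short}; full value {full}")
    return notes
```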

Conclusion

XML is the best format for unambiguously archiving scholarly content. But publishers need to ensure that it really is the definitive version, and it is in fact the format of record. In other words, publishers must literally have greater confidence in their XML than in their PDF. They can only be sure of this if the PDFs, and all other “readable” formats, are converted fully automatically from the XML that is archived. Having 100% reliable XML saves costs in future too because all content is accessible, and because any other formats can be produced automatically and with minimal cost.